Calculating perseverative errors in the WCST

Hi,

I am extremely impressed by the OpenSesame WCST tutorial https://osdoc.cogsci.nl/3.2/tutorials/wcst/

but I have found it too complicated to work out how to calculate perseverative errors.

I found a post that provided instructions for calculating the number of categories achieved https://forum.cogsci.nl/discussion/6650/wcst-scoring but not perseverative errors. These are a frequently used and useful measure in schizophrenia research, where they are taken to indicate a failure to update the sort rule in working memory.

To calculate perseverative errors, I need to know which sort rule participants are using on each individual trial.

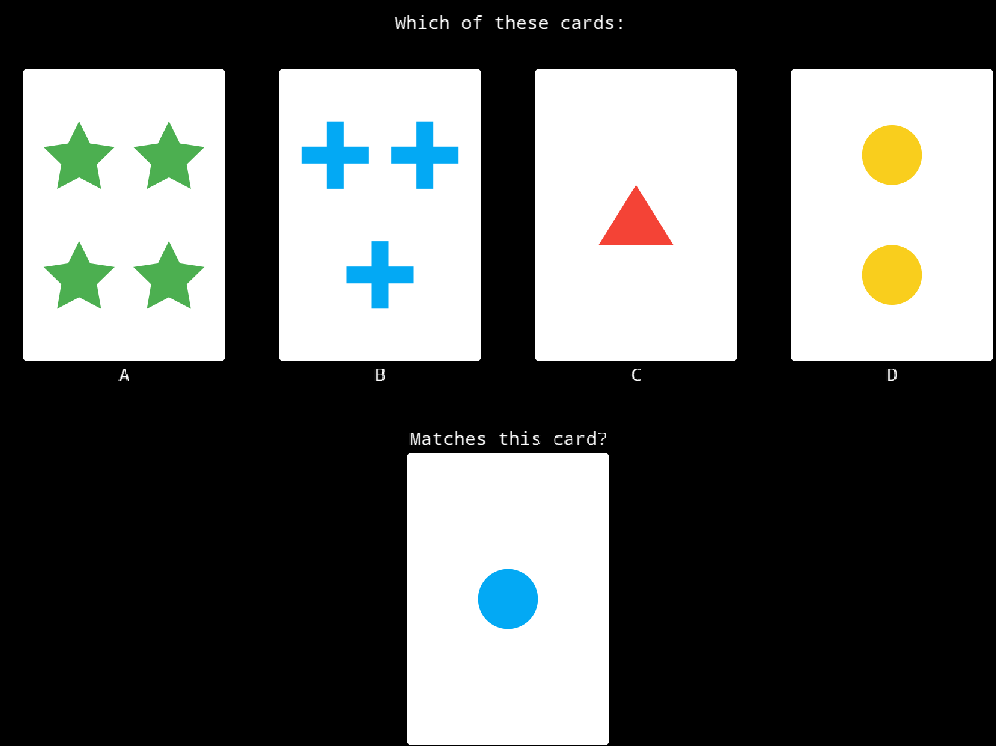

In this example:

If participants choose B, then they are sorting by colour

If participants choose C, then they are sorting by number

If participants choose D, then they are sorting by shape

If participants choose A, then they don't understand the task
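The mapping from chosen card to sort rule can be sketched in plain JavaScript. This is only an illustration: the card objects, their property names (`color`, `number`, `shape`) and the example values are hypothetical and are not the variable names used in the tutorial experiment. The idea is to list every dimension on which the chosen card matches the card to be sorted; an empty list corresponds to the "doesn't understand the task" case.

```javascript
// Hypothetical card representation; property names and values are
// illustrative and not taken from the tutorial experiment.
function inferMatchingRules(target, chosen) {
  const dimensions = ["color", "number", "shape"];
  // Keep every dimension on which the chosen card equals the target card.
  return dimensions.filter((dim) => target[dim] === chosen[dim]);
}

// Card to be sorted (made-up values).
const target = { color: "green", number: 3, shape: "circle" };

// The four cards to match against, keyed by response (made-up values).
const cards = {
  a: { color: "red", number: 1, shape: "triangle" },
  b: { color: "green", number: 2, shape: "star" },
  c: { color: "yellow", number: 3, shape: "cross" },
  d: { color: "blue", number: 4, shape: "circle" },
};

// Work out which rule(s) each possible response would imply.
const rulesByResponse = {};
for (const [key, card] of Object.entries(cards)) {
  rulesByResponse[key] = inferMatchingRules(target, card);
}
console.log(rulesByResponse);
```

Returning a list rather than a single rule is a deliberate choice here: on some trials a card may match the target on more than one dimension, in which case the rule the participant used is ambiguous.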

I have tried to get my head around this, and I don't even know whether it is possible to calculate perseverative errors using this OpenSesame implementation.

Any advice would be gratefully received.

Many Thanks

Deiniol

Comments

I've had a go at this. I declared a variable to log the participant's current matching rule (part_matching_rule) at the start of the experiment, in the prepare phase of an inline JavaScript element.

I then used a series of if/else statements that compare the properties of the card to be sorted (response_shape, response_color and response_number) with the properties of the 4 cards to match (shape 1-4, colour 1-4 and number 1-4) and with the response (a, b, c, d). These are in the run phase of an inline JavaScript element (update_part_matching_rule), which I placed at the end of the trial sequence.

Here is the script, which works on the first trial but doesn't change thereafter. Do I need to reset the variable at the start of each trial?

I am pleased to have got this far. Any help would be gratefully received.

Many Thanks

Deiniol

Hi Deiniol,

If I understand correctly, perseverative errors are responses that would have been correct if the previous rule still applied? Couldn't you then use the same procedure you use to set the correct response for setting perseverative errors, but instead of the current sorting rule, use the previous one?

Attached is an example experiment (I downloaded it from OSF, so I'm not sure it is the same one you have). I added two items: one to calculate the "correct" perseverative response, and a feedback item for that response. In the init js_item I initialized that variable, and in update switch rule I also updated the previous switch rule.
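As a rough illustration of that idea (not the code from the attached experiment), the same lookup can be run twice: once with the current rule and once with the previous rule. Everything here is hypothetical: the function names, the `trial` object and its fields, and the card values. The extra condition that a perseverative error must also be wrong under the current rule is an assumption added for the sketch; it only matters when one card happens to satisfy both rules.

```javascript
// Hypothetical helper: return the key of the card that matches the target
// on the given rule ("color", "number" or "shape"), or null if none does.
function correctResponseFor(rule, target, cards) {
  for (const [key, card] of Object.entries(cards)) {
    if (card[rule] === target[rule]) {
      return key;
    }
  }
  return null;
}

// Score one trial. previousRule is null before the first rule switch.
function scoreTrial(trial) {
  const correctResponse = correctResponseFor(
    trial.currentRule, trial.target, trial.cards);
  const correctPersResponse = trial.previousRule === null
    ? null
    : correctResponseFor(trial.previousRule, trial.target, trial.cards);
  const correct = trial.response === correctResponse;
  // Assumption: count a perseverative error only if the response is wrong
  // under the current rule but right under the previous rule.
  const persError = !correct &&
    correctPersResponse !== null &&
    trial.response === correctPersResponse;
  // Store explicit 1/0 values rather than booleans.
  return { correct: correct ? 1 : 0, pers_error: persError ? 1 : 0 };
}

// Made-up trial: the rule has just switched from colour to number,
// but the participant still sorts by colour (response "b").
const exampleTrial = {
  currentRule: "number",
  previousRule: "color",
  response: "b",
  target: { color: "green", number: 3, shape: "circle" },
  cards: {
    a: { color: "red", number: 1, shape: "triangle" },
    b: { color: "green", number: 2, shape: "star" },
    c: { color: "yellow", number: 3, shape: "cross" },
    d: { color: "blue", number: 4, shape: "circle" },
  },
};
console.log(scoreTrial(exampleTrial));
```

In an experiment, the previous rule would have to be saved at the moment the rule switches, so that it is still available on the trials that follow.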

Does that script make sense?

Eduard

Many thanks @eduard,

I am not the most logically minded and your code works.

I built the experiment using the online tutorial, then got the completed version to help with the more complicated aspects. This is probably the most complicated experiment I have tried to develop, and I am learning a lot.

I just have one question. The pers_error variable returns yes or no rather than 1 or 0. Is this because pers_error is calculated by comparing two string responses (vars.pers_error = vars.correct_pers_response==vars.response)?

Hi,

I have seen this before a couple of times, and honestly, I don't have a clue why some comparisons return yes/no while others return 0/1 (so true/false). So, also here, I don't know. The more explicit way would be:

    if (vars.correct_pers_response == vars.response) {
        vars.pers_error = 1
    } else {
        vars.pers_error = 0
    }

Good luck,

Eduard