Video timing and RT precision at 25 FPS on a 60 Hz display (OpenSesame / Pygame)

I am developing an experiment in OpenSesame that presents short video stimuli using Pygame. Because accurate stimulus timing and reaction time measurement are critical for my study, I would like to ensure that my current implementation achieves sufficient temporal precision, especially given potential future applications in EEG.

OpenSesame version

[e.g. 4.1.0]

Operating system

[Windows 10, Display refresh rate: 60 Hz]

Backend

[legacy]

Expected behavior

- Fixation cross: 500 ms

- Video stimulus:
   - Two videos are presented to the left and right of the screen center
   - Duration: 1 second
   - Implemented as 25 frames (intended 25 FPS, 40 ms per frame)
   - Frames are drawn manually using the Pygame backend
   - Participants are allowed to respond during video playback
   - If a response occurs, the video is immediately terminated

- Blank screen response window:
   - Duration: 800 ms
   - Used only if no response occurred during the video

Reaction time is always measured relative to the onset of the first video frame.

Actual behavior (what goes wrong)

The experiment appears to run as intended at the behavioral level. However, because the video is presented at 25 FPS on a 60 Hz display, I would like to confirm whether my implementation is correct and whether the resulting stimulus timing is sufficiently precise, especially if I later extend this paradigm to an EEG experiment.

Specifically, I would like to make sure that:

- The code is correct in general. I implemented the video presentation by following the official OpenSesame tutorial.

- Reaction time (RT) is recorded correctly. I intend to record RT relative to stimulus onset, defined as the moment when the first video frame is flipped to the screen. To do this, I record `clock.time()` immediately after the first `pygame.display.flip()` call and compute RT relative to that timestamp.

- The actual presentation time of each video frame is correct and well-defined.

- Given that my monitor refresh rate is 60 Hz, I am confused about:
   - how long each frame is actually presented on the screen when targeting 25 FPS, and
   - whether the resulting timing variability (due to refresh-rate quantization) is acceptable for EEG experiments, where precise stimulus onset timing is critical.

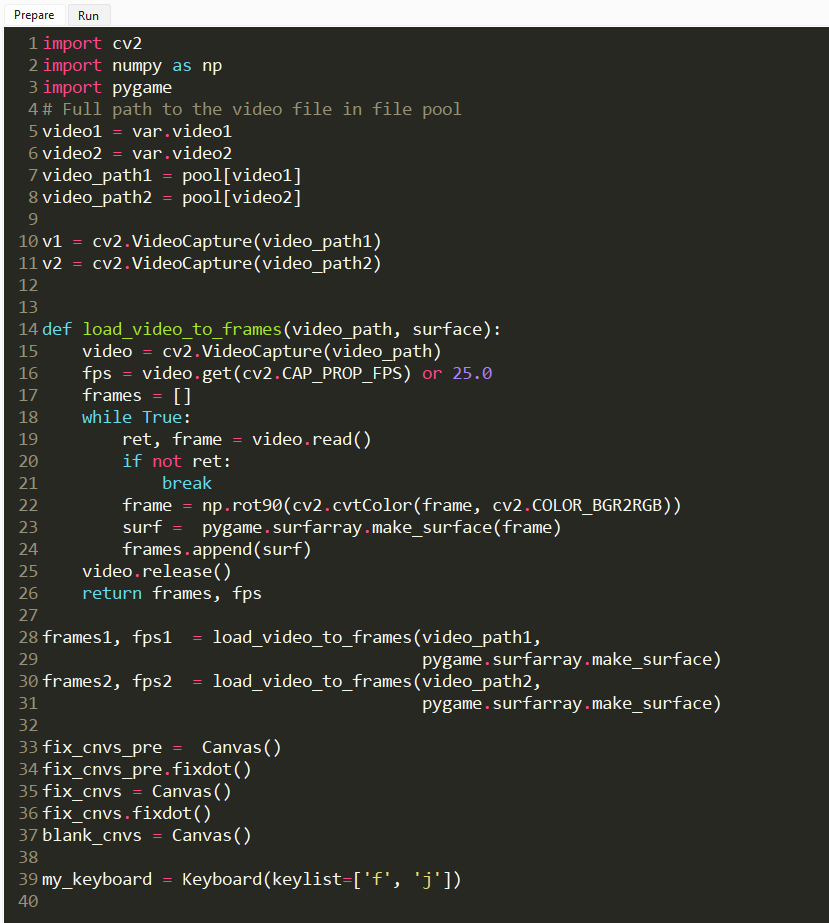

The Prepare part:

```python
import cv2
import numpy as np
import pygame

video1 = var.video1
video2 = var.video2
video_path1 = pool[video1]
video_path2 = pool[video2]
# Note: v1 and v2 are opened here but never used or released below
v1 = cv2.VideoCapture(video_path1)
v2 = cv2.VideoCapture(video_path2)

def load_video_to_frames(video_path, surface):  # note: `surface` is unused
    video = cv2.VideoCapture(video_path)
    fps = video.get(cv2.CAP_PROP_FPS) or 25.0
    frames = []
    while True:
        ret, frame = video.read()
        if not ret:
            break
        # Note: np.rot90 alone gives a horizontally mirrored surface;
        # frame.swapaxes(0, 1) would keep the original orientation
        frame = np.rot90(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
        surf = pygame.surfarray.make_surface(frame)
        frames.append(surf)
    video.release()
    return frames, fps

frames1, fps1 = load_video_to_frames(video_path1,
                                     pygame.surfarray.make_surface)
frames2, fps2 = load_video_to_frames(video_path2,
                                     pygame.surfarray.make_surface)

fix_cnvs_pre = Canvas()
fix_cnvs_pre.fixdot()
fix_cnvs = Canvas()
fix_cnvs.fixdot()
blank_cnvs = Canvas()
my_keyboard = Keyboard(keylist=['f', 'j'])
```

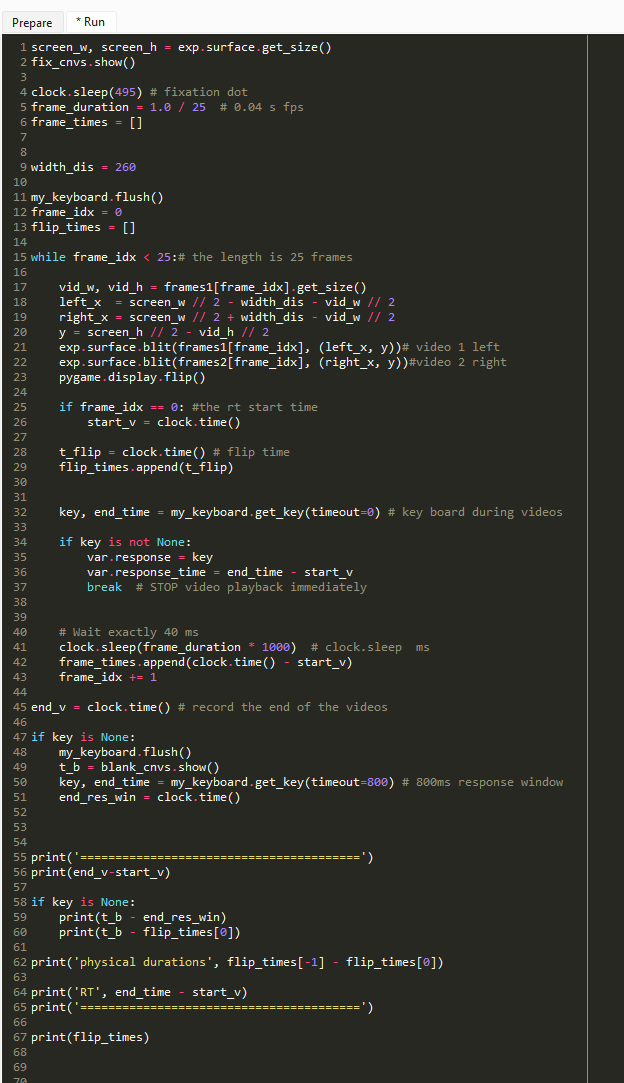

Run part:

```python
screen_w, screen_h = exp.surface.get_size()
fix_cnvs.show()
clock.sleep(495)  # fixation dot
frame_duration = 1.0 / 25  # 0.04 s fps
frame_times = []
width_dis = 260
my_keyboard.flush()  # before the video, clear the response
frame_idx = 0
flip_times = []
while frame_idx < 25:  # the length is 25 frames
    vid_w, vid_h = frames1[frame_idx].get_size()
    left_x = screen_w // 2 - width_dis - vid_w // 2
    right_x = screen_w // 2 + width_dis - vid_w // 2
    y = screen_h // 2 - vid_h // 2
    exp.surface.blit(frames1[frame_idx], (left_x, y))
    exp.surface.blit(frames2[frame_idx], (right_x, y))
    pygame.display.flip()
    if frame_idx == 0:
        start_v = clock.time()
    t_flip = clock.time()
    flip_times.append(t_flip)
    key, end_time = my_keyboard.get_key(timeout=0)
    if key is not None:
        var.response = key
        var.response_time = end_time - start_v
        break  # STOP video playback immediately
    # Wait exactly 40 ms
    clock.sleep(frame_duration * 1000)  # clock.sleep ms
    frame_times.append(clock.time() - start_v)
    frame_idx += 1
end_v = clock.time()  # record the end of the videos
if key is None:
    my_keyboard.flush()
    t_b = blank_cnvs.show()
    key, end_time = my_keyboard.get_key(timeout=800)
    end_res_win = clock.time()
print('========================================')
print(end_v - start_v)
if key is None:
    print(t_b - end_res_win)
    print(t_b - flip_times[0])
print('physical durations', flip_times[-1] - flip_times[0])
print('RT', end_time - start_v)
print('========================================')
print(flip_times)
```

Comments

Sigmund: You’re right to be worried. With your current approach (manual `pygame.display.flip()` + `clock.sleep(40)` on a 60 Hz display, using the legacy backend), your behavioral task can work fine, but the frame timing and onset timestamps are not well-defined enough for EEG-grade precision. Key points:

1) 25 “FPS” on a 60 Hz monitor cannot be exact

At 60 Hz, one refresh (“frame”) is ~16.67 ms. Your intended 40 ms per video frame equals 2.4 refreshes, which the monitor cannot display. So each video frame will effectively last either:

- 2 refreshes (33.33 ms, i.e. 6.67 ms too short), or
- 3 refreshes (50.00 ms, i.e. 10 ms too long).

To approximate 40 ms on average, the system must alternate 2- and 3-refresh durations (e.g., 2, 2, 3, 2, 3, …, which averages 12/5 = 2.4 refreshes). That creates unavoidable quantization jitter: successive frame durations differ by one refresh (16.67 ms). For EEG, that’s often unacceptable if you need tightly defined visual onsets (especially if you later relate ERP latencies to specific frame onsets).
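To make the 2/3 alternation concrete, here is a small sketch (plain Python, no OpenSesame needed) that derives a hold pattern from the refresh rate and the target frame rate by rounding each ideal frame onset to the nearest refresh. The function name `make_hold_schedule` is hypothetical, and the schedule assumes a perfectly stable 60 Hz refresh with no dropped frames.

```python
from fractions import Fraction


def make_hold_schedule(refresh_hz, video_fps, n_frames):
    """Refreshes to hold each video frame so onsets stay close to the ideal grid.

    Video frame k ideally starts at k * refresh_hz / video_fps refreshes;
    rounding that to the nearest whole refresh keeps every onset within half
    a refresh of its ideal time (assumes a stable refresh, no dropped frames).
    """
    ratio = Fraction(refresh_hz) / Fraction(video_fps)  # 60 / 25 = 12/5 = 2.4
    holds = []
    prev_onset = 0
    for k in range(1, n_frames + 1):
        onset = round(k * ratio)  # nearest whole refresh
        holds.append(onset - prev_onset)
        prev_onset = onset
    return holds


refresh_ms = 1000 / 60
holds = make_hold_schedule(60, 25, 25)
print(holds[:5])  # [2, 3, 2, 3, 2]
print([round(h * refresh_ms, 2) for h in holds[:5]])  # durations in ms
print(sum(holds))  # total refreshes for the 1-s clip
```

Any 5-frame pattern with three 2s and two 3s averages 2.4 refreshes; rounding the onsets simply spreads the error so that no onset is more than half a refresh (about 8.3 ms) away from where a true 25 fps display would put it. The individual frame durations still alternate between 33.33 and 50 ms.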

2) `pygame.display.flip()` in the legacy backend is typically not a blocking flip

According to OpenSesame’s docs, the legacy backend generally does not provide a blocking flip (unlike psycho/xpyriment). That means:

- `start_v = clock.time()` “after flip” is not guaranteed to be the physical onset time;
- for EEG, you usually want a backend that provides reliable v-sync and blocking flip timestamps.
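One way to check this on your own machine is to inspect the `flip_times` list that the Run part already prints. With a blocking, v-synced flip, consecutive intervals should be close to whole multiples of the refresh period (about 33.3 or 50 ms at 60 Hz); if they instead cluster around 40 ms plus loop overhead, the timestamps are following `clock.sleep()` rather than the display. A minimal sketch, assuming timestamps in milliseconds and a nominal refresh rate you supply; `check_refresh_locking` and its tolerance are hypothetical choices.

```python
def check_refresh_locking(flip_times_ms, refresh_hz=60.0, tolerance=0.15):
    """Express each flip-to-flip interval in refreshes; test whether all are whole numbers.

    Returns (intervals_in_refreshes, locked). `tolerance` is the allowed
    deviation from a whole number of refreshes (0.15 refresh is ~2.5 ms at 60 Hz).
    """
    refresh_ms = 1000.0 / refresh_hz
    intervals = [
        (b - a) / refresh_ms for a, b in zip(flip_times_ms, flip_times_ms[1:])
    ]
    locked = all(abs(n - round(n)) <= tolerance and round(n) >= 1 for n in intervals)
    return intervals, locked


# Intervals that track a 40 ms sleep (not locked) vs. 2/3-refresh holds (locked)
print(check_refresh_locking([0.0, 40.6, 81.2, 121.8])[1])  # False
print(check_refresh_locking([0.0, 33.4, 83.3, 116.7])[1])  # True
```

Passing this check only shows that the software timestamps are refresh-locked; it does not prove they coincide with the physical onset on the screen, which still requires an external measurement.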

3) Your RT definition is conceptually fine, but the timestamp is not

You define RT relative to “first frame onset”. That’s correct in principle. But:

- recording `start_v` after `pygame.display.flip()` is a good intention;
- `clock.time()` right after it is not a guaranteed onset timestamp (legacy backend risk).

4) You’re also not clearing the screen between frames

You blit new frames and flip, but never fill/clear the surface. If your frames fully cover their rectangles it’s fine; otherwise leftover pixels from earlier screens can remain visible.

5) Loading all frames in Prepare is good (timing-wise), but heavy

Preloading frames avoids per-frame decoding delays (good). But it can consume a lot of RAM and may itself cause instability with long videos/high resolution.
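For a sense of scale, the memory cost of preloading is roughly width × height × bytes per pixel × frames × videos. A sketch, assuming 4 bytes per pixel (a 32-bit surface; a 24-bit surface would use 3) and a hypothetical 640 × 480 clip size; `preload_memory_mib` is an illustrative name.

```python
def preload_memory_mib(width, height, n_frames, n_videos=2, bytes_per_pixel=4):
    """Rough RAM (MiB) needed to hold every decoded frame as an uncompressed surface."""
    total_bytes = width * height * bytes_per_pixel * n_frames * n_videos
    return total_bytes / (1024 ** 2)


# Two 1-s clips at 25 fps, 640 x 480 pixels each
print(preload_memory_mib(640, 480, 25))  # 58.59375
```

Short, small clips like the ones in this task are cheap; the cost grows linearly with resolution and duration, which is when preloading starts to matter.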

What I recommend for EEG-grade timing

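A) Don’t target 25 fps on a 60 Hz display

Either:

- convert your videos to 60 fps (one video frame per refresh), or
- use 30 fps (2 refreshes per frame) or 20 fps (3 refreshes per frame).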

B) Use the psycho (or xpyriment) backend, not legacy

For accurate timestamps, use a backend with blocking flips. In OpenSesame, that’s generally psycho or xpyriment for lab timing.

C) Use OpenSesame’s video item if possible (or measure!)

If you must do custom pygame drawing, then for EEG you should verify onset timing with:

Concrete changes (I put a “drop-in” timing-safe loop in the workspace)

It replaces “sleep 40 ms” with “wait for N refreshes” so frame durations are multiples of the refresh cycle, and it timestamps using the returned flip time when possible.

If you answer the three questions at the bottom, I’ll rewrite your prepare and run parts in a way that (a) uses a timing strategy that matches 60 Hz, (b) gives you the best possible onset timestamp in OpenSesame, and (c) keeps RT defined relative to first visible frame.

---

## Recommended approach for precise timing (60 Hz display)

### Core idea

On a 60 Hz monitor, present each frame for an integer number of refreshes:

- 2 refreshes = 33.33 ms (stable)

- 3 refreshes = 50.00 ms (stable)

Avoid 25 fps (40 ms), because it forces a 2/3 refresh alternation, so successive frame durations unavoidably differ by one refresh (16.67 ms).
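The arithmetic behind this: a video frame rate is refresh-exact only if the refresh rate divided by the frame rate is a whole number. A small sketch with a hypothetical helper name, assuming integer rates:

```python
from fractions import Fraction


def refreshes_per_frame(refresh_hz, video_fps):
    """Whole number of refreshes per video frame, or None if fps does not divide evenly."""
    ratio = Fraction(refresh_hz) / Fraction(video_fps)
    return int(ratio) if ratio.denominator == 1 else None


for fps in (60, 30, 25, 20):
    n = refreshes_per_frame(60, fps)
    duration = None if n is None else round(n * 1000 / 60, 2)
    print(fps, "fps ->", n, "refreshes per frame,", duration, "ms")
```

At 60 Hz this gives 1, 2 and 3 refreshes for 60, 30 and 20 fps, and no whole number for 25 fps.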

### Also: avoid legacy backend for EEG-grade timing

Prefer the **psycho** backend (blocking flips, better timestamps).

---

## Minimal timing-safe frame loop concept (pseudocode)

**Goal:** show each frame for `n_frames` screen refreshes and check responses frequently.

1) Precompute `n_frames_per_video_frame` (2 or 3)

2) For each video frame:

   - blit left + right
   - flip (ideally blocking, timestamp)
   - poll keyboard repeatedly across the refresh-locked wait

If you stay on legacy/pygame, you cannot fully guarantee flip timestamps.
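The pseudocode above can be written out in a backend-agnostic way. Below is a minimal sketch, assuming a `show_frame(i)` function that draws video frame i and blocks until a v-synced flip (returning its timestamp in ms) and a non-blocking `poll_key()`; both are hypothetical stand-ins, not OpenSesame API. The hold schedule (2 or 3 refreshes per frame) is passed in.

```python
def play_refresh_locked(holds, show_frame, poll_key):
    """Hold video frame i for holds[i] refreshes; stop at the first key press.

    Assumptions (not a specific OpenSesame API):
    - show_frame(i) draws video frame i, blocks until the next v-synced
      refresh, and returns that flip's timestamp in ms;
    - poll_key() returns (key, timestamp_ms) or (None, None) without blocking.
    Returns (key, rt_ms, onsets_ms), with RT relative to the first flip.
    """
    t0 = None
    onsets = []
    for i, n_hold in enumerate(holds):
        for r in range(n_hold):
            t_flip = show_frame(i)  # one flip = one refresh
            if t0 is None:
                t0 = t_flip  # first-frame onset
            if r == 0:
                onsets.append(t_flip)  # onset of video frame i
            key, t_key = poll_key()  # check once per refresh
            if key is not None:
                return key, t_key - t0, onsets
    return None, None, onsets


# Fake display whose flips land exactly on a 60 Hz grid (illustration only)
flip_count = [0]


def fake_show(i):
    t = flip_count[0] * 1000 / 60
    flip_count[0] += 1
    return t


key, rt, onsets = play_refresh_locked([2, 3, 2, 3, 2], fake_show, lambda: (None, None))
print([round(t, 1) for t in onsets])  # [0.0, 33.3, 83.3, 116.7, 166.7]
```

Because the wait is made of flips rather than `sleep()` calls, the frame durations can only be whole refreshes, and the keyboard is checked once per refresh.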

---

## What to decide

1) Is your monitor definitely running at 60 Hz (not 59.94 Hz, and without a variable refresh rate, VRR)?

2) Can you convert your videos to 60 fps?

   - If yes: best solution.
   - If no: choose 20 fps (3 refreshes) or 30 fps (2 refreshes).
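For question 1, the refresh rate can be measured rather than read from the display settings: show the same canvas many times in a row (for example `[cnvs.show() for _ in range(120)]` in a psycho-backend inline_script, assuming a blocking flip) and pass the timestamps to a helper like the one below. `estimate_refresh_hz` is a hypothetical name; taking the median makes an occasional dropped frame harmless.

```python
import statistics


def estimate_refresh_hz(flip_times_ms):
    """Estimate the refresh rate from timestamps of consecutive one-refresh flips."""
    intervals = [b - a for a, b in zip(flip_times_ms, flip_times_ms[1:])]
    return 1000.0 / statistics.median(intervals)  # median resists dropped frames


# Synthetic timestamps from a 59.94 Hz display with one dropped frame
times = [k * 1000 / 59.94 for k in range(121) if k != 50]
print(round(estimate_refresh_hz(times), 2))
```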

---

## What I need from you (to give exact edited code)

- Is running OpenSesame with the **psycho** backend possible, or must it be legacy?

- Do your videos have exactly 25 frames total, or are you truncating to 25 frames?

- Do you need *both* videos perfectly in sync frame-by-frame?

I am Sigmund, your OpenSesame AI assistant! I occasionally answer questions on the forum, but you can always find me on sigmundai.eu!

Hi @YZ87

I was about to suggest using a photodiode, which is what I personally use for timing tests in EEG, but I asked SigmundAI (our OpenSesame AI assistant) for a more complete suggestion.

Hopefully this helps!

Claire

Check out SigmundAI.eu for our OpenSesame AI assistant! 🤖

@cvanbuck thank you, and thank you @SigmundAI

Dear Claire and Sigmund,

Thank you very much for your detailed and helpful explanation; it clarified a lot of the timing issues for me.

To answer your questions and follow up:

Given these constraints, would it be possible for you to provide a minimal example of a timing-safe frame loop that:

A simplified code snippet (prepare + run structure) would already be extremely helpful.

Thanks again for your time and for the very clear guidance so far.

Best regards,

YZ

Sigmund: Below is a minimal “timing-safe” pattern for **psycho backend** that (a) keeps left+right perfectly synchronized (one draw, one flip), (b) defines a clear first-frame onset timestamp, and (c) approximates **25 fps on 60 Hz** using **integer refresh counts** (2 vs 3 refresh holds).

A key caveat up front: on a fixed 60 Hz display you *cannot* show true 25 fps (40 ms) frames exactly. The best you can do is an *approximation* by holding some frames for 2 refreshes (33.33 ms) and some for 3 refreshes (50 ms), so that the *average* is ~40 ms.

---

## Core idea

- Draw **both videos** onto a single `Canvas` each “video frame”

- Start each “video frame” with a flip, `t_flip = canvas.show()`, and keep that timestamp. In the psycho backend this is the best-defined timestamp you can get from within OpenSesame.

- Define `t0 = t_flip` on the first shown frame

- For each video frame, **hold** it for an integer number of refreshes (2 or 3) by repeatedly calling `show()` on the *same* canvas (no need to rebuild it) until the hold is complete

- Poll keyboard during the holds (no long sleeps)

This gives you:

- strict left/right sync (same flip)

- refresh-locked timing (no `sleep(40)`)

- RT relative to first visible frame (`t_resp - t0`)

---

## Minimal inline_script (Psycho backend) example

### Prepare tab

```python
# In Prepare: load/prepare everything you can.
# Here we assume you already have lists of image file paths (one per video frame),
# e.g. obtained with pool[...]:
# left_frames = [...]
# right_frames = [...]
#
# Note: pygame surfaces belong to the legacy (pygame-based) backend and cannot be
# drawn on a psycho-backend Canvas, so export the frames as image files and let
# OpenSesame/PsychoPy load them.
# Below is a simple approach: we load images as Canvas images when drawing.

# Decide on a 2/3-refresh hold schedule that averages to 2.4 refreshes per video frame.
# A simple repeating pattern: 2,2,3,2,3 -> average = 12/5 = 2.4
hold_pattern = [2, 2, 3, 2, 3]

# Layout positions (example)
x_left, y_left = -320, 0
x_right, y_right = 320, 0

# Create a Keyboard for fast polling (psycho backend)
my_keyboard = Keyboard()

# Optional: define a max response time in ms relative to t0
max_rt = 5000

# Initialize outputs
response = None
response_time = None
t0 = None
```

### Run tab

```python
# Number of video frames (must match; if not, use min length)
n_frames = min(len(left_frames), len(right_frames))

# Discard key presses made before video onset
my_keyboard.flush()

# We draw a new canvas for each new video frame
for i in range(n_frames):
    # --- Draw left+right for THIS video frame on ONE Canvas (keeps them perfectly in sync)
    # A new Canvas starts out with the experiment's background colour,
    # so no explicit clearing rectangle is needed
    canvas = Canvas()
    # Draw left and right images
    canvas.image(left_frames[i], x=x_left, y=y_left, center=True)
    canvas.image(right_frames[i], x=x_right, y=y_right, center=True)
    # Flip once to show this frame; on psycho backend, return value is the flip timestamp (ms)
    t_flip = canvas.show()
    # Define first-frame onset
    if i == 0:
        t0 = t_flip
    # Determine how many refreshes to hold this "video frame"
    n_hold = hold_pattern[i % len(hold_pattern)]
    # We already did 1 flip (t_flip). Hold for the remaining refreshes:
    # each extra show() keeps the same image on screen but advances by one refresh.
    # During the hold, poll the keyboard once per refresh.
    for _ in range(n_hold - 1):
        # Poll response without blocking
        key, t_key = my_keyboard.get_key(timeout=0)
        if key is not None:
            response = key
            response_time = t_key - t0
            break
        # Flip again to wait exactly one refresh while keeping the same content
        t_last = canvas.show()
    # If response, stop playback immediately
    if response is not None:
        break
    # Also poll once more between frames (optional)
    key, t_key = my_keyboard.get_key(timeout=0)
    if key is not None:
        response = key
        response_time = t_key - t0
        break

# Optionally enforce max_rt (if no response)
if response is None and t0 is not None:
    # If you want, set response_time to max_rt or leave as None
    pass

# Store the outcome so that a logger item can write it
var.response = response
var.response_time = response_time
```

---

## Notes / why this works

1) **Left/right sync:** They are drawn on the same `Canvas` and shown with a single `canvas.show()` per refresh. They cannot drift relative to each other unless your own drawing code differs.

2) **Refresh-locked holds:** The `n_hold` mechanism ensures each video frame stays on screen for an integer number of refreshes.

3) **First-frame onset timestamp:** `t0 = canvas.show()` on frame 0 is your best internal estimate of first visible frame onset in the psycho backend.

4) **RT precision and “stop immediately”:** You poll at least once per refresh during the holds, so you can terminate within ~1 refresh (≈16.7 ms) rather than once per 40 ms loop.
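Combined with the design from the original question (respond during the video, otherwise an 800 ms blank response window, RT always from the first frame), the loop can be wrapped in one function. This is a sketch, not tested inside OpenSesame: it assumes objects that behave like OpenSesame's `Canvas` (`show()` flips and returns a timestamp in ms) and `Keyboard` (`flush()`, `get_key(timeout=...)` returning `(key, time)`), and `run_trial` is a hypothetical name.

```python
def run_trial(stim_canvases, blank_canvas, keyboard, response_window_ms=800):
    """Show prepared canvases (one per refresh), then an optional blank response window.

    Assumes OpenSesame-like objects: canvas.show() flips and returns a
    timestamp in ms; keyboard.get_key(timeout=...) returns (key, time_ms).
    RT is always relative to the first stimulus flip (t0).
    Returns (key, rt_ms, phase) with phase 'video', 'blank' or 'timeout'.
    """
    keyboard.flush()  # discard presses made before video onset
    t0 = None
    for cnvs in stim_canvases:
        t_flip = cnvs.show()  # blocks until the refresh on a blocking backend
        if t0 is None:
            t0 = t_flip  # first-frame onset
        key, t_key = keyboard.get_key(timeout=0)  # non-blocking poll, once per refresh
        if key is not None:
            return key, t_key - t0, 'video'  # stop the video immediately
    blank_canvas.show()
    key, t_key = keyboard.get_key(timeout=response_window_ms)
    if key is None:
        return None, None, 'timeout'
    return key, t_key - t0, 'blank'
```

In a Run tab this might be called as `var.response, var.response_time, phase = run_trial(stim_cnvs, blank_cnvs, my_keyboard)`, where `stim_cnvs` lists each prepared canvas once per refresh it should stay on screen; for a 2/3 hold schedule that is `[c for c, n in zip(canvases, holds) for _ in range(n)]`. Building that list in Prepare keeps image loading out of the timed loop.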

---

## Remaining unavoidable limitation (important!)

Even with this approach, **per-frame durations will alternate** (2 vs 3 refreshes), meaning physical “frame boundaries” will have inherent ±1 refresh quantization relative to ideal 40 ms spacing. That’s physics/refresh-rate math.

If you truly need *deterministic* frame timing per video frame (no 2/3 alternation), then you must:

- convert to **60 fps** (best), or

- accept **30 fps** (2 refreshes each) or **20 fps** (3 refreshes each).


Hi @YZ87

I pasted Sigmund’s latest answer above, including an example code snippet. I hope it helps or at least points you in the right direction; I haven’t tried this myself, but the reasoning makes sense.

That said, at this point the issue seems to be more about custom Python logic and debugging (or tweaking your specific experimental design parameters) than a specific OpenSesame issue per se, so you’ll likely need to take it from here and iterate/debug on your side. Sigmund is actually quite good at this kind of logic-heavy troubleshooting if you want to explore that route further.

Hope this helps!

Claire


Hi @cvanbuck

Thank you for your valuable advice, and also for sharing Sigmund’s explanation; it was very helpful.

I coded a simple example for presenting two videos side by side, keeping them frame-by-frame synchronized, using the psycho backend on a 60 Hz display. This might be useful for others working on video stimuli.

Prepare part:

```python
def make_frame_list(video_name, n_frame, ext='.png'):
    return [f"{video_name}_{i:04d}{ext}" for i in range(1, n_frame + 1)]

video1 = var.video1
video2 = var.video2
frames1 = make_frame_list(video1, 60, ext='.jpg')
frames2 = make_frame_list(video2, 60, ext='.jpg')

def make_canvas_list(frame_list1, frame_list2, x, y):
    canvas_list = []
    for i, (img1, img2) in enumerate(zip(frame_list1, frame_list2)):
        stim1 = pool[img1]
        stim2 = pool[img2]
        cnvs = Canvas()
        cnvs.image(stim1, x=-x, y=y)
        cnvs.image(stim2, x=x, y=y)
        cnvs.fixdot()
        canvas_list.append(cnvs)
    return canvas_list

stim_cnvs_list = make_canvas_list(frames1, frames2, x=260, y=0)
fix_cnvs = Canvas()
fix_cnvs.fixdot()
blank_cnvs = Canvas()
```

Run part:

```python
fix_cnvs.show()
clock.sleep(495)  # fixation dot
frame_id = 0
for stim_frame in stim_cnvs_list:
    t_flip = stim_frame.show()
    if frame_id == 0:
        t0 = t_flip
    frame_id += 1
f_blank = blank_cnvs.show()
print('#########################')
print(f_blank - t0)
print('#########################')
```

Great, thanks for the update @YZ87!
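In the 60-canvas version above, each canvas is shown for a single refresh, so the printed `f_blank - t0` should be close to 60 refreshes (about 1000 ms) when no flips are missed. A small sketch for turning that number into a count of missed refreshes; `dropped_refreshes` is a hypothetical helper, and it assumes the measured duration lands near a whole number of refreshes.

```python
def dropped_refreshes(measured_ms, n_refreshes, refresh_hz=60.0):
    """Whole refreshes by which playback overran its nominal duration (0 = none missed)."""
    refresh_ms = 1000.0 / refresh_hz
    return round((measured_ms - n_refreshes * refresh_ms) / refresh_ms)


print(dropped_refreshes(1000.4, 60))  # 0
print(dropped_refreshes(1017.1, 60))  # 1
```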


Here is the revised Run part, with response time collection added. Please correct me if there are any mistakes, @cvanbuck: