Bayesian post-hoc tests for factorial ANOVA

Hi All,

I can't decide whether I'm missing something conceptually or whether this just hasn't been implemented in JASP yet (v0.14.1).

If one has a 2 x 2 between-subjects design, one might be interested in conducting post-hoc tests that follow up on the interaction. I would attach an example 2 x 2 ANOVA dataset, but I get a 413 (file too large) error when I try to upload it. The variables of interest are an individuating cue (a cue about whether a specific statement is a lie or the truth) and context-general information (whether the participant believes the base rate is biased towards lies or towards truths), and the DV is the proportion of truth judgments made by participants (PTJ).

Ordinarily I would have a planned comparison here: when reliable honesty cues (one level of the individuating cue) are available, PTJ will be high, but when they are weak, the decision depends on the context (higher PTJ when the belief is that most people will tell the truth). But I'm trying to understand more about conducting post-hoc tests in JASP, for didactic reasons.

I see that post-hoc tests are available for the main effects but not for the interaction. One might want to assess, for example, whether there is evidence to update toward the alternative hypothesis of an effect of context when a weak honesty cue is present.

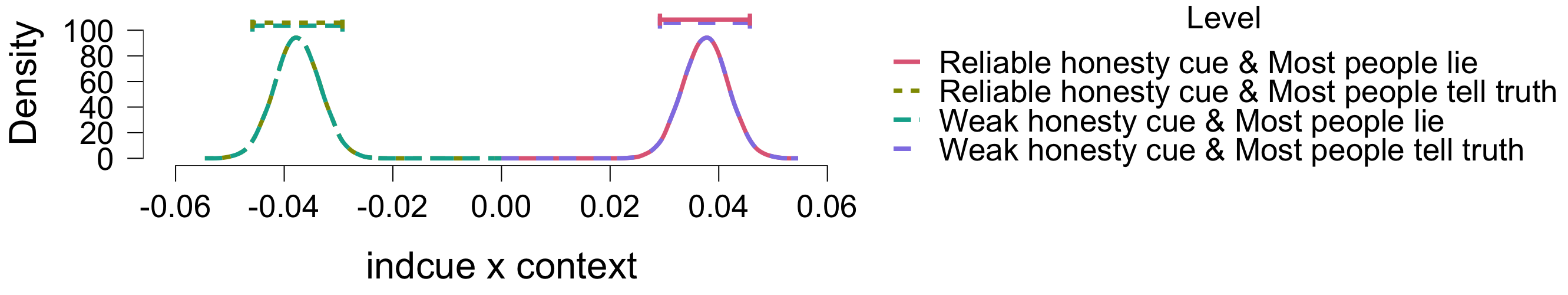

There is an option for plotting the model-averaged posteriors, which gives 95% CIs for each of the four cells of the design. However, I'm guessing that these posteriors are not based on models that use the null control of their priors discussed in other threads and in the de Jong preprint (https://psyarxiv.com/s56mk). Also, I'm not sure what is on the x-axis of this plot.

Any guidance would be very helpful. Thanks in advance,

Chris

Comments

Hi Chris,

If you want, you can also send the data to j<dot>b<dot>vandoorn<at>uva<dot>nl, so that I can take a closer look at it. From the plots you posted, it seems that reliable cue + most truth and weak cue + most lie yield highly similar effect sizes, and the same goes for reliable cue + most lie and weak cue + most truth. The x-axis in this case shows the effect size for that specific cell of your design.
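One possible reading of that symmetry, offered only as a hedged sketch: if the plotted parameters are sum-to-zero (effects-coded) deviations on the scale of the DV, which is an assumption the thread does not confirm, then in a 2 x 2 design the four interaction terms are forced into a +a / -a pattern. The snippet below decomposes a table of hypothetical cell means (not the data from this thread) into a grand mean, main effects and interaction effects; the names `cell_means` and `decompose_2x2` are illustrative only.

```python
# Hypothetical 2 x 2 cell means of PTJ, NOT the data from this thread.
# Rows: individuating cue (reliable, weak); columns: context (most truth, most lie).
cell_means = [
    [0.80, 0.70],
    [0.60, 0.40],
]


def decompose_2x2(means):
    """Split a 2 x 2 table of cell means into sum-to-zero (effects-coded) parts.

    Returns (grand, row_eff, col_eff, inter) such that
    means[i][j] == grand + row_eff[i] + col_eff[j] + inter[i][j].
    """
    grand = sum(sum(row) for row in means) / 4
    row_eff = [sum(means[i]) / 2 - grand for i in range(2)]
    col_eff = [(means[0][j] + means[1][j]) / 2 - grand for j in range(2)]
    inter = [
        [means[i][j] - grand - row_eff[i] - col_eff[j] for j in range(2)]
        for i in range(2)
    ]
    return grand, row_eff, col_eff, inter


grand, row_eff, col_eff, inter = decompose_2x2(cell_means)
# The two diagonal cells share one interaction value and the two
# off-diagonal cells share its negative.
print([[round(x, 3) for x in row] for row in inter])  # -> [[-0.025, 0.025], [0.025, -0.025]]
```

Under this coding, an interaction deviation of a few hundredths can coexist with cell means near 0.80, because the grand mean and the main effects carry most of the level.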

You could also create dummy variables to test two specific cells against each other. You can use the create column functionality, with either R or the graphical editor, and then perform t-tests to test for an effect. The drawback is that the prior model odds won't be corrected for multiple comparisons, as they are in the ANOVA.
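A minimal Python sketch of the dummy-variable route, assuming long-format data with one row per participant. In JASP itself this would be a computed column written in R or built in the graphical editor; the rows, level names and the helper `contrast_dummy` here are hypothetical. The dummy codes the two cells being compared as 1 and 0 and marks every other cell as missing, and the script then computes a classical pooled-variance t statistic. A Bayesian t-test in JASP would use the same grouping column, but the Bayes factor itself is not computed here.

```python
import math
from statistics import mean, variance

# Hypothetical long-format rows: (individuating cue, context, PTJ). Not real data.
rows = [
    ("weak", "most truth", 0.70), ("weak", "most truth", 0.65),
    ("weak", "most truth", 0.75), ("weak", "most lie", 0.45),
    ("weak", "most lie", 0.50), ("weak", "most lie", 0.40),
    ("reliable", "most truth", 0.85), ("reliable", "most lie", 0.80),
]


def contrast_dummy(cue, context):
    """Hypothetical dummy: 1 / 0 for the two cells compared, None (missing) otherwise."""
    if cue == "weak" and context == "most truth":
        return 1
    if cue == "weak" and context == "most lie":
        return 0
    return None


def pooled_t(a, b):
    """Student's two-sample t statistic (pooled variance) and its degrees of freedom."""
    n1, n2 = len(a), len(b)
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


dummy = [contrast_dummy(cue, ctx) for cue, ctx, _ in rows]
# Rows with a missing dummy (the reliable-cue cells) drop out of the comparison.
group_a = [ptj for d, (_, _, ptj) in zip(dummy, rows) if d == 1]
group_b = [ptj for d, (_, _, ptj) in zip(dummy, rows) if d == 0]

t, df = pooled_t(group_a, group_b)
print(f"simple effect of context under a weak cue: t({df}) = {t:.2f}")
```

Each such test is run in isolation, which is exactly why no multiplicity correction is applied to its prior odds.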

Does this clarify things?

Kind regards

Johnny

Hi Johnny,

Thanks for the reply, I really appreciate it. This is pretty much what I thought: there is no correction implemented yet (admittedly, I don't understand enough to know whether that's a statistical or a logistical issue). I'm able to interpret the data as they are, and to do some form of contrast coding in R, for instance, but my question was more a didactic one about a correction for the interaction. Thanks for the response, and for offering to look at the dataset.
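To make the idea of correcting prior model odds concrete, here is an illustrative sketch. It is not necessarily the formula JASP uses: it fixes the prior probability that all nulls are true at 0.5 and, as a simplifying assumption, treats the nulls as independent, so each of m comparisons gets a prior null probability of 0.5^(1/m). Posterior odds are then the Bayes factor times these corrected prior odds. The function names are hypothetical.

```python
def corrected_prior_odds(n_comparisons, p_all_null=0.5):
    """Per-comparison prior odds H1:H0 when P(all nulls true) is fixed.

    Simplifying assumption: the nulls are treated as independent, so each
    one gets prior probability p_all_null ** (1 / n_comparisons).
    """
    p_null = p_all_null ** (1 / n_comparisons)
    return (1 - p_null) / p_null


def posterior_odds(bf10, prior_odds):
    """Posterior odds H1:H0 = Bayes factor (BF10) times prior odds."""
    return bf10 * prior_odds


# More comparisons -> smaller prior odds for any single alternative.
for m in (1, 4, 6):
    print(m, round(corrected_prior_odds(m), 3))
```

For example, a hypothetical BF10 of 3 from one of four such t-tests would give corrected posterior odds of about 3 × 0.19 ≈ 0.57, rather than 3 under uncorrected 1:1 prior odds.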

Chris

Sorry, to add to that: you mentioned that the graph shows the effect size. I don't think this is partial eta squared, because I've seen values elsewhere on the forums that exceed one (I don't remember where, sorry). The raw values in this dataset range between 0 and 1.00, and a posterior belief of 0.04 would not be plausible on the raw scale of the data (for the truth cue + truth background condition we can expect values closer to 0.80). Given the symmetry, I figured this might be the coefficient in the general linear model?

C