Weird bias in intercept estimation in JASP

Hi Everyone,

I'm teaching statistics with JASP. I made a small notebook where we recover y = a + b*group with frequentist and Bayesian linear regression (using dummy coding). The frequentist estimates are fine, but the intercept in the Bayesian linear regression is very biased. I played with the priors and tried re-sampling, but this keeps happening. Can anyone help?

I'm attaching the notebook and a few screenshots.

Thank you,

Nitzan

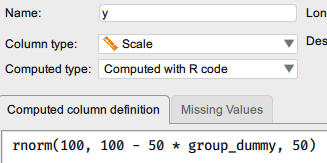

How the dependent variable is computed:

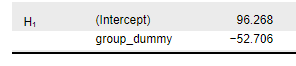

Frequentist regression is fine:

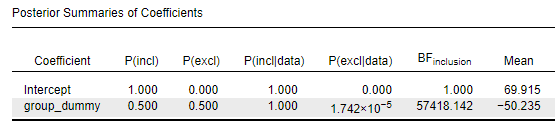

Bayesian regression always shows a very biased intercept:

Comments

Hi Nitzan,

The Bayesian model uses a centered design matrix. So rather than y ~ intercept + b*x, it fits y ~ intercept + b*(x - mean(x)). This does not affect the slope or its significance, but it does change the interpretation of the intercept: it models the overall mean of y instead of the mean of the reference category. If I center group_dummy with the R code `group_dummy - mean(group_dummy)`, I get very similar results:
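To see why centering moves the intercept but not the slope, here is a minimal frequentist sketch in Python/NumPy. The simulated data, the true values `a = 100`, `b = -30`, and the group sizes are hypothetical and only stand in for the notebook's data. With a 0/1 dummy, the uncentered intercept equals the reference-group mean, while the centered intercept equals the overall mean of y; the slope is identical in both fits.

```python
import numpy as np


def ols(X, y):
    """Ordinary least squares coefficients for design matrix X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Hypothetical data: y = a + b*group + noise, two equal-sized groups.
rng = np.random.default_rng(0)
n_per_group = 50
group_dummy = np.repeat([0.0, 1.0], n_per_group)
a, b = 100.0, -30.0
y = a + b * group_dummy + rng.normal(0.0, 10.0, size=group_dummy.size)

ones = np.ones_like(group_dummy)

# Uncentered dummy: intercept = mean of the reference group (group 0).
raw = ols(np.column_stack([ones, group_dummy]), y)

# Centered dummy: intercept = overall mean of y; slope is unchanged.
centered_dummy = group_dummy - group_dummy.mean()
centered = ols(np.column_stack([ones, centered_dummy]), y)

print("uncentered intercept, slope:", raw)
print("centered intercept, slope:  ", centered)
```

The two intercepts are linked by `centered[0] == raw[0] + raw[1] * mean(group_dummy)`, so either one can be converted into the other after fitting.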

Hope that helps!

Don

The difference between -29.402 and -24.435 is still very large, no?

That's expected shrinkage due to both the parameter prior and the model prior. If you pick the priors "just right" then you can make the two approaches produce more or less identical results:

Note, however, that this is a terrible thing to do. The shrinkage in the Bayesian parameter estimates reflects the uncertainty about the models, including the possibility that the effect is absent. With the original, more sensible prior settings, the model-averaged estimate is roughly 0.908 * 29.402 + 0.092 * 0.0 ≈ 26.697, where 0.908 is the posterior probability of the model that includes the group effect and the null model contributes an estimate of 0. The remaining shrinkage is due to the prior on the parameter.
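The two sources of shrinkage can be sketched in a few lines of Python. This is an illustration under stated assumptions, not JASP's implementation. `model_averaged_estimate` weights each model's estimate by its posterior model probability, with the null model's estimate fixed at 0. `g_prior_shrinkage` assumes a Zellner g-prior with a fixed `g`, under which the conditional posterior mean of a slope is `g / (1 + g)` times the least-squares estimate. JASP's default parameter prior averages over g rather than fixing it, so the factor here is only a simplified stand-in. Both function names are hypothetical.

```python
def model_averaged_estimate(p_alt, estimate_alt, estimate_null=0.0):
    """Bayesian model averaging over two models (null vs. alternative).

    p_alt is the posterior probability of the model with the effect;
    the null model assigns the effect an estimate of 0.
    """
    p_null = 1.0 - p_alt
    return p_alt * estimate_alt + p_null * estimate_null


def g_prior_shrinkage(estimate, g):
    """Conditional posterior mean under a fixed-g Zellner g-prior (assumption)."""
    return g / (1.0 + g) * estimate


# Model-prior shrinkage using the numbers from this thread.
bma = model_averaged_estimate(0.908, 29.402)
print(round(bma, 3))  # 26.697
```

As g grows, `g / (1 + g)` approaches 1 and the parameter-prior shrinkage disappears. As the posterior probability of the null model grows, the model-averaged estimate is pulled further toward 0.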