Sampling a nested loop without replacement

Hi all,

I tried to be brief, and failed. Hopefully it's readable and someone will be able to help.

I would really appreciate it!

I'm designing an artificial grammar learning (AGL) task in which participants see a sequence of 3-10 letters and need to type them at the end.

The sequences are taken from a pool, and selected randomly for every participant.

For example, say that I have 120 sequences of each length (e.g., 120 sequences of 3 letters, 120 sequences of 4 letters, etc.), and each participant sees 20 different sequences of 3 letters, 20 different sequences of 4 letters, etc.

The sequences of different lengths should appear in random order (i.e., not first the sequences of 3 letters, then the sequences of 4, etc.). It is important that a participant never sees the same sequence more than once.

Right now, I have a main loop (which determines the length of the sequence) and nested loops (one for every sequence length), each of which runs only if its run condition is met (more details in the attached file).

The nested loop breaks after one trial and goes back to the main loop, and on the next trial the random sampling starts again. This results in sampling with replacement, which is problematic in this design.

Is there a way to sample without replacement? For example, by deleting a row from the loop once it has been executed?

Thanks for reading!

Looking forward to your responses,

Tali

Comments

Hi Tali,

One option would be to set the inner loop's Break-if statement to always, and uncheck the Evaluate on first cycle option. That way the inner loop will only run for one cycle. Then you can check the Resume after break option so that the inner loop will pick up where it left off when it is called again by the outer loop. This will implement random sampling without replacement. Does that make sense?
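To make the logic concrete, here is a minimal plain-Python sketch of what this setup amounts to. It is only a toy model, not OpenSesame code: the `ResumableLoop` class, the placeholder sequence names, and the pool sizes (taken from the example in the question) are all made up for illustration. Each inner loop shuffles its rows once and remembers its position between calls, so a row is never drawn twice.

```python
import random


class ResumableLoop:
    """Toy model of a loop that runs one cycle per call and resumes where it left off."""

    def __init__(self, items, rng):
        # Shuffle once, up front; the order then stays fixed for the session.
        self._order = list(items)
        rng.shuffle(self._order)
        self._position = 0

    def run_one_cycle(self):
        # Hand out the next unused row; the position persists between calls,
        # which is what makes this sampling without replacement.
        if self._position >= len(self._order):
            raise IndexError("pool exhausted")
        item = self._order[self._position]
        self._position += 1
        return item


rng = random.Random(1)

# Hypothetical pools: 120 placeholder sequences for each length from 3 to 10.
pools = {n: [f"len{n}_seq{i}" for i in range(120)] for n in range(3, 11)}
inner_loops = {n: ResumableLoop(pool, rng) for n, pool in pools.items()}

# Outer loop: every length occurs 20 times, in random order.
outer_order = [n for n in range(3, 11) for _ in range(20)]
rng.shuffle(outer_order)

# Each outer cycle triggers exactly one cycle of the matching inner loop.
shown = [inner_loops[n].run_one_cycle() for n in outer_order]
```

If the inner loop instead reshuffled and restarted on every call, the same row could come up again, which is the with-replacement behavior described in the question.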

I'm not entirely sure whether nested loops are even necessary here, or whether you'd also be able to implement this through clever use of advanced loop operations. (Maybe, maybe not—it's not obvious to me.)
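For comparison, a design like this can also be sketched without any nested loops by drawing the whole trial list up front. The snippet below is plain Python and only an illustration under assumptions: `build_trial_list` is a hypothetical helper, the sequence names are placeholders, and the numbers (120 per pool, 20 per participant) come from the example in the question. It is not a description of how the loop item works internally.

```python
import random


def build_trial_list(pools, n_per_length, rng):
    """Draw n_per_length sequences per length without replacement, then interleave."""
    trials = []
    for length, pool in pools.items():
        # sample() picks distinct positions, so no pool entry is drawn twice.
        for seq in rng.sample(pool, n_per_length):
            trials.append((length, seq))
    # Mix the lengths so that they do not appear in blocks.
    rng.shuffle(trials)
    return trials


# Hypothetical pools: 120 placeholder sequences for each length from 3 to 10.
pools = {n: [f"len{n}_seq{i}" for i in range(120)] for n in range(3, 11)}
trials = build_trial_list(pools, 20, random.Random())
```

Calling this once per participant gives each participant a fresh random selection, while no sequence repeats within a session.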

Cheers!

Sebastiaan

Check out SigmundAI.eu for our OpenSesame AI assistant!

Hi Sebastiaan

Thanks for your response!

You are right, I didn't need any nested loops.

I've now designed it with advanced loop operations and checked the "Resume after break" option.

Now, for some reason, it crashes in the middle of the experiment (every time), and the sampling is still with replacement.

Do you have any idea why?

Thanks a lot again,

Tali

No, because you haven't provided an error message!

There was no error message; the experiment just stopped. I've attached the file.

Thanks!

Taku

It will always say something when the experiment ends, even if you don't see a detailed stack trace. For example, it may say that Python crashed, or that the experiment ended successfully.

OK, that's weird. Today it worked without crashing.

I'm attaching the file in case anyone wants to use it as a template for an AGL task.

Thanks!

Tali

Sorry to bring this up again, but it crashed without any changes.

It ran twice without any problem but crashed the third time.

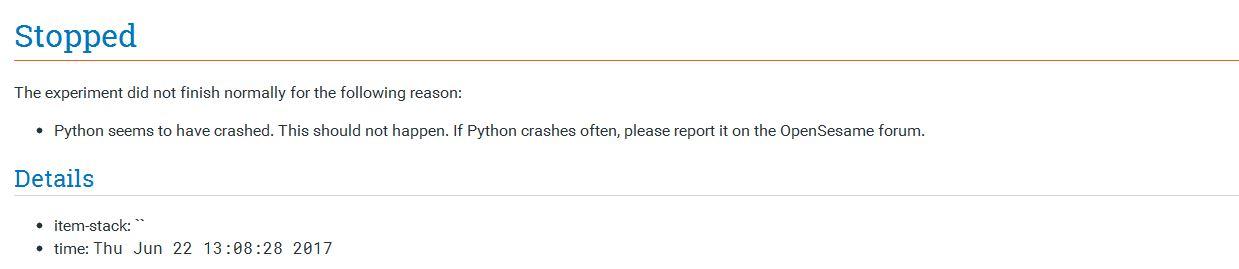

That's the message I got:

I was afraid of that. This means that the Python interpreter crashed. That's always a bug somewhere in the underlying libraries, not a problem in your experiment, nor in OpenSesame (well, the underlying libraries are part of OpenSesame, in a sense, but not in the OpenSesame code).

Can you find a pattern in the crashes? I.e., does it always crash at the same moment in the experiment? And do other experiments using the legacy backend crash as well on this computer?

I ran it on two different computers, one running Windows 7 and one running Windows 10.

When it crashed, it did so after a different number of trials each time. So far I haven't had any problems with other experiments...

It does happen after I run the experiment 3 or 4 times in a row.

Ok, so then there's at least one obvious workaround: Close and restart OpenSesame after running an experiment.

This sounds like there are carry-over effects from one experiment to the next, which in turn suggests that the inprocess runner is used (which doesn't start a new process for each experiment). Is that correct? If you go to Menu → Tools → Preferences, can you change the Runner to Multiprocess, or is this already the case?

Hi Sebastiaan

It's already on Multiprocess