Delay between parallel port trigger and sound.

Hi everyone,

We are switching our EEG lab's installation to Python.

We are mainly interested in recording EEG activity during the presentation of sounds. However, we still have an unsolved problem concerning the delay between the triggers sent to the recorder and the actual onset of the sounds: we cannot achieve millisecond accuracy.

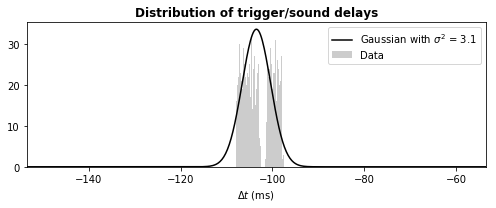

The distribution of delays looks like this:

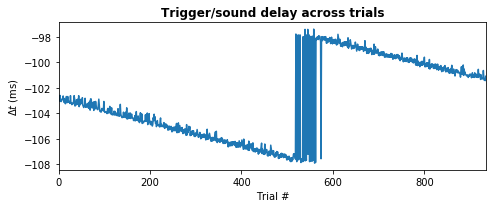

The jitter is definitely not < 1 ms; it is closer to 3 ms. Furthermore, the distribution is oddly bimodal... And it gets even weirder when you look at the delays individually, trial by trial:

We have no explanation for this pattern. Do you have any idea why we see this trend across trials? It kind of looks like an aliasing pattern to me, as if two clocks were running at slightly different speeds and slowly desynchronizing...
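To illustrate the "two clocks" idea, here is a minimal simulation sketch in Python. It assumes (this is only a hypothesis, not a diagnosis of the setup above) that a sound can only start at the next audio buffer boundary, and that trials are requested at a fixed interval that is not a multiple of the buffer length. The function name and all numbers (44.1 kHz, 128-frame buffer, 500 ms between trials) are made up for illustration.

```python
def simulated_delays_ms(n_trials, interval_frames, buffer_frames, rate_hz):
    """Wait until the next buffer boundary for each trial's play request."""
    delays = []
    for k in range(n_trials):
        # Time of the k-th play request, counted in audio frames.
        request_frame = k * interval_frames
        # Frames left until the next multiple of buffer_frames (0 if aligned).
        wait_frames = (-request_frame) % buffer_frames
        delays.append(1000.0 * wait_frames / rate_hz)
    return delays


# Hypothetical values: one trial every 500 ms (22050 frames at 44.1 kHz),
# audio delivered in buffers of 128 frames (about 2.9 ms).
delays = simulated_delays_ms(200, 22050, 128, 44100)
print([round(d, 2) for d in delays[:8]])
```

Plotted trial by trial, these delays step through a repeating ramp bounded by one buffer length, which is the kind of slow drift one would expect if the trial clock and the audio buffer clock beat against each other.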

Here are the details:

1. We record the delays between the triggers and the sound using a small electrical montage that takes (a) the actual sound output as the left channel and (b) the parallel port output pin as the right channel. The two signals form the two channels of a jack cable that is plugged into another computer, which records them.

2. We are using Expyriment and the xpy.stimuli.Audio.present() method. We have also tried PyAudio with the stream.write(data) method.

3. We have disabled synchronization with the screen (xpy.control.defaults.open_gl=False).

4. We have tried sending the trigger both before and after the xpy.stimuli.Audio.present() call. It doesn't affect the results.
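For reference, the measurement described in point 1 can be sketched as follows: find the first sample above a threshold in each channel of the stereo recording and convert the difference to milliseconds. This is a simplified sketch, not the actual analysis script; the function names, the threshold of 0.1 and the synthetic single-trial data are assumptions for illustration.

```python
def first_onset(samples, threshold):
    """Index of the first sample whose absolute value exceeds threshold, or None."""
    for i, s in enumerate(samples):
        if abs(s) > threshold:
            return i
    return None


def trigger_to_sound_delay_ms(sound, trigger, rate_hz, threshold=0.1):
    """Delay of the sound onset relative to the trigger onset, in ms."""
    sound_onset = first_onset(sound, threshold)
    trigger_onset = first_onset(trigger, threshold)
    if sound_onset is None or trigger_onset is None:
        raise ValueError("no onset found in one of the channels")
    return 1000.0 * (sound_onset - trigger_onset) / rate_hz


# Synthetic single trial at 44.1 kHz: the trigger (right channel) rises at
# sample 100, the sound (left channel) starts at sample 541.
rate_hz = 44100
trigger = [0.0] * 100 + [1.0] * 900
sound = [0.0] * 541 + [0.5, -0.5] * 229 + [0.5]
delay = trigger_to_sound_delay_ms(sound, trigger, rate_hz)
print(delay)
```

A simple threshold is sensitive to noise and to slow sound onsets, so the threshold has to be chosen well above the recording's noise floor.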

Thanks a lot for your help!

Comments

Hi there,

As described in our paper, as well as on our documentation page, audio timing is not ms accurate and can be delayed quite a bit, as you have experienced, depending on a variety of factors. This will be the case with any audio setup to some degree. You can try to decrease the buffer size in order to improve the timing a bit. PyAudio also helps a bit (in fact, we will switch our audio system to it in the future). But none of that will get you into the range of 1 ms. Even studio-grade audio setups don't get there.
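As a rough guide to why the buffer size matters: one buffer holds frames / sample rate seconds of audio, and the sound card cannot react faster than that. The sketch below only does this arithmetic; the helper name and the 44.1 kHz sample rate are assumptions, and the real output latency is usually larger than a single buffer because of driver and hardware buffering. In PyAudio, the buffer size is the frames_per_buffer argument of PyAudio.open().

```python
def buffer_duration_ms(frames_per_buffer, rate_hz):
    """Duration of one audio buffer in milliseconds."""
    return 1000.0 * frames_per_buffer / rate_hz


# Assumed sample rate of 44.1 kHz; the buffer sizes are typical powers of two.
for frames in (2048, 1024, 512, 256, 128):
    print(frames, "frames:", round(buffer_duration_ms(frames, 44100), 2), "ms")
```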

The delay itself, however, might not be so problematic if you know it and if it doesn't jitter too much. In fact, a jitter of 3 ms is very good (even better than what we measured in our tests).
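A minimal sketch of what "knowing the delay" can look like in practice: estimate the mean delay and its spread from test measurements, then shift the recorded trigger times by the mean. The function names and the example numbers are invented for illustration, and the sketch assumes the sound always follows the trigger.

```python
import statistics


def summarize_delays(delays_ms):
    """Return (mean delay, jitter as sample standard deviation) in ms."""
    return statistics.mean(delays_ms), statistics.stdev(delays_ms)


def correct_trigger_times(trigger_times_ms, mean_delay_ms):
    """Shift trigger timestamps so they mark the estimated sound onset."""
    return [t + mean_delay_ms for t in trigger_times_ms]


# Made-up measurements (ms) from a test run, and two made-up trigger times.
measured = [11.0, 13.0, 12.0, 14.0, 10.0]
mean_delay, jitter = summarize_delays(measured)
corrected = correct_trigger_times([1000.0, 1500.0], mean_delay)
print(mean_delay, round(jitter, 2), corrected)
```

The correction removes the constant part of the delay only; the jitter remains as timing noise on every trial.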

If this is not sufficient, our common suggestion for ms accurate and stable audio presentation is to use an external device (e.g. a sampler), that is triggered via MIDI.
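To give an idea of the MIDI side, here is a sketch of a note-on message and of how long it takes to transmit over a classic 5-pin DIN MIDI link (31250 baud, 10 bits per byte including start and stop bits). Which message a given sampler expects is an assumption here, the helper names are made up, and actually sending the bytes requires a MIDI library or interface that is not shown; USB-MIDI timing differs.

```python
def note_on(channel, note, velocity):
    """Build a 3-byte MIDI note-on message (channel 0-15, note/velocity 0-127)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel, note or velocity out of range")
    return bytes([0x90 | channel, note, velocity])


def din_midi_transfer_ms(n_bytes, baud=31250, bits_per_byte=10):
    """Time to send n_bytes over a serial DIN MIDI link, in milliseconds."""
    return 1000.0 * n_bytes * bits_per_byte / baud


message = note_on(0, 60, 127)  # middle C at full velocity on the first channel
transfer = din_midi_transfer_ms(len(message))
print(message.hex(), transfer)
```

The transmission time is constant for a given message length, so it adds to the fixed delay rather than to the jitter.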

Thanks for your quick reply,

Yes, you are right: PyAudio is a bit better, and decreasing the buffer size helps a lot. However, when using the same hardware and the same computer but running Presentation (Neurobehavioral Systems), we actually achieve very good timing (latency around 10 ms and jitter around 1 ms). Apparently, this may be because Presentation can take over some background tasks and control the sound card at a low level. But you are right: if I don't manage to solve the problem, I will definitely rely on an external device and MIDI. Thanks!

Yes, 10 ms latency and 1 ms jitter are generally possible on Windows (on my music production PC I get an even lower latency), especially when using proper ASIO drivers (which are the standard in the pro audio world). I believe that PyAudio can access ASIO devices, so that might be something to look into, but I am not sure how well it performs.

A second PC/laptop with an ASIO-capable audio interface (or, even better, a Linux system with a JACK server and a real-time kernel) that is triggered from the stimulation PC might also be an alternative to an external device such as a sampler.

OK, thanks a lot, I'll try that!